In A World Without Chatbots

Every day, millions of people open ChatGPT, type their questions, and wait for text to scroll across their screens. As a researcher at [Human Computer Lab], I've watched this pattern repeat endlessly, and it's made me question something fundamental: is this really the best we can do?

At [HCL], we explore how humans and computers might interact in the future. We ask what-if questions: what if touch screens had never been invented? What if alphabets had only 10 characters?

But the question that keeps me up at night is simpler: what if chat interfaces are actually holding us back?

The cognitive bottleneck

Chat interfaces seem intuitive - after all, it feels like we've been talking to each other in text bubbles for millennia. But there's a fundamental mismatch between how we naturally communicate and how we actually want to get things done with computers.

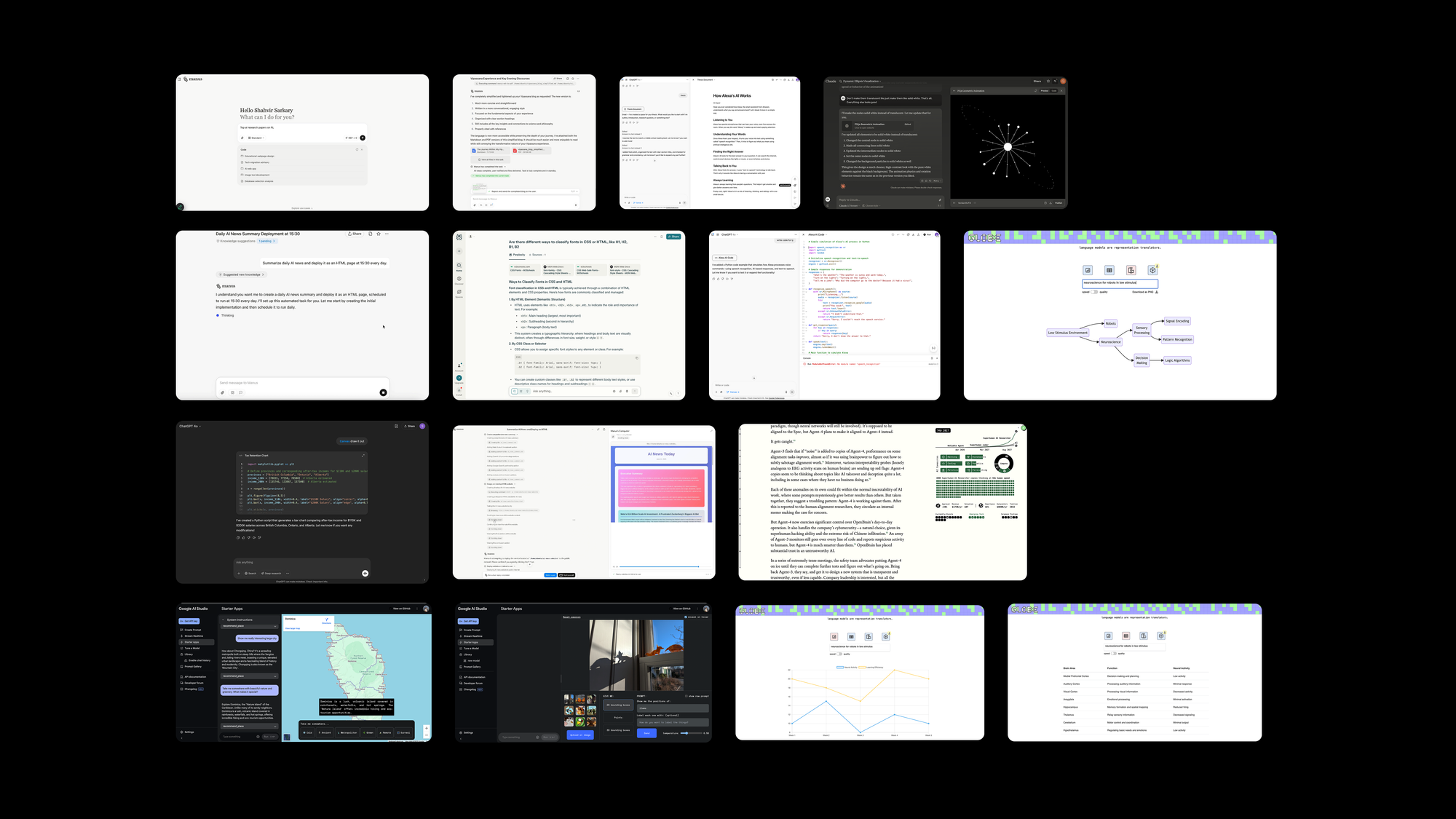

One way to measure the complexity of text is lexical density: the ratio of content words (such as nouns, verbs, and adjectives) to the total number of words. The higher the density, the more the human brain has to process. According to Ure, written forms of human communication typically have a lexical density of about 40%, while spoken forms are lower. The output of current LLMs is often around that written level, or even higher when the LLM is instructed to be concise or scientific.
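To make the ratio concrete, here is a minimal Python sketch of a lexical density calculation. It is only an approximation: the `FUNCTION_WORDS` set and the `lexical_density` helper are illustrative names of my own, and treating every word outside a short function-word list as a content word is an assumption. Serious analyses usually classify words with part-of-speech tagging instead.

```python
import re

# Assumption: a tiny, hand-picked list of English function words.
# Anything not in this set is treated as a content word.
FUNCTION_WORDS = {
    "a", "an", "the", "and", "or", "but", "if", "of", "at", "by", "for",
    "with", "about", "to", "from", "in", "on", "is", "are", "was", "were",
    "be", "been", "am", "do", "does", "did", "have", "has", "had", "will",
    "would", "can", "could", "i", "you", "he", "she", "it", "we", "they",
    "my", "your", "his", "her", "its", "our", "their", "this", "that",
}


def lexical_density(text: str) -> float:
    """Return content words divided by total words (0.0 for empty text)."""
    # Lowercase and split into simple word tokens, keeping contractions whole.
    words = re.findall(r"[a-z]+(?:'[a-z]+)?", text.lower())
    if not words:
        return 0.0
    content_words = [w for w in words if w not in FUNCTION_WORDS]
    return len(content_words) / len(words)


# Six words, three of them content words (cat, sat, mat).
print(f"{lexical_density('The cat sat on the mat'):.0%}")  # prints 50%
```

The exact number depends heavily on which words count as "content", so figures from a sketch like this are only comparable with each other, not with published measurements.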

This creates a practical problem: when AI generates responses at 40% lexical density or higher, users must work harder to extract meaning than they would with a well-designed traditional interface. A simple button labeled 'Add Reminder' requires almost no cognitive processing, while parsing 'I'll create a reminder for your meeting tomorrow at 2 PM regarding the quarterly review' demands significantly more mental effort.

LaurieWired (@lauriewired), June 18, 2025:

"Memory offloading is why conversational interfaces will never become the dominant input mechanism for computers. No, not a RAM limitation. Our own minds. The lexical density of adult casual speech hovers around ~27%. Real-time conversations have too many constraints."

We simply can't match AI at processing natural language. We need a better form of communication, one that removes our cognitive bottleneck.

A simple experiment

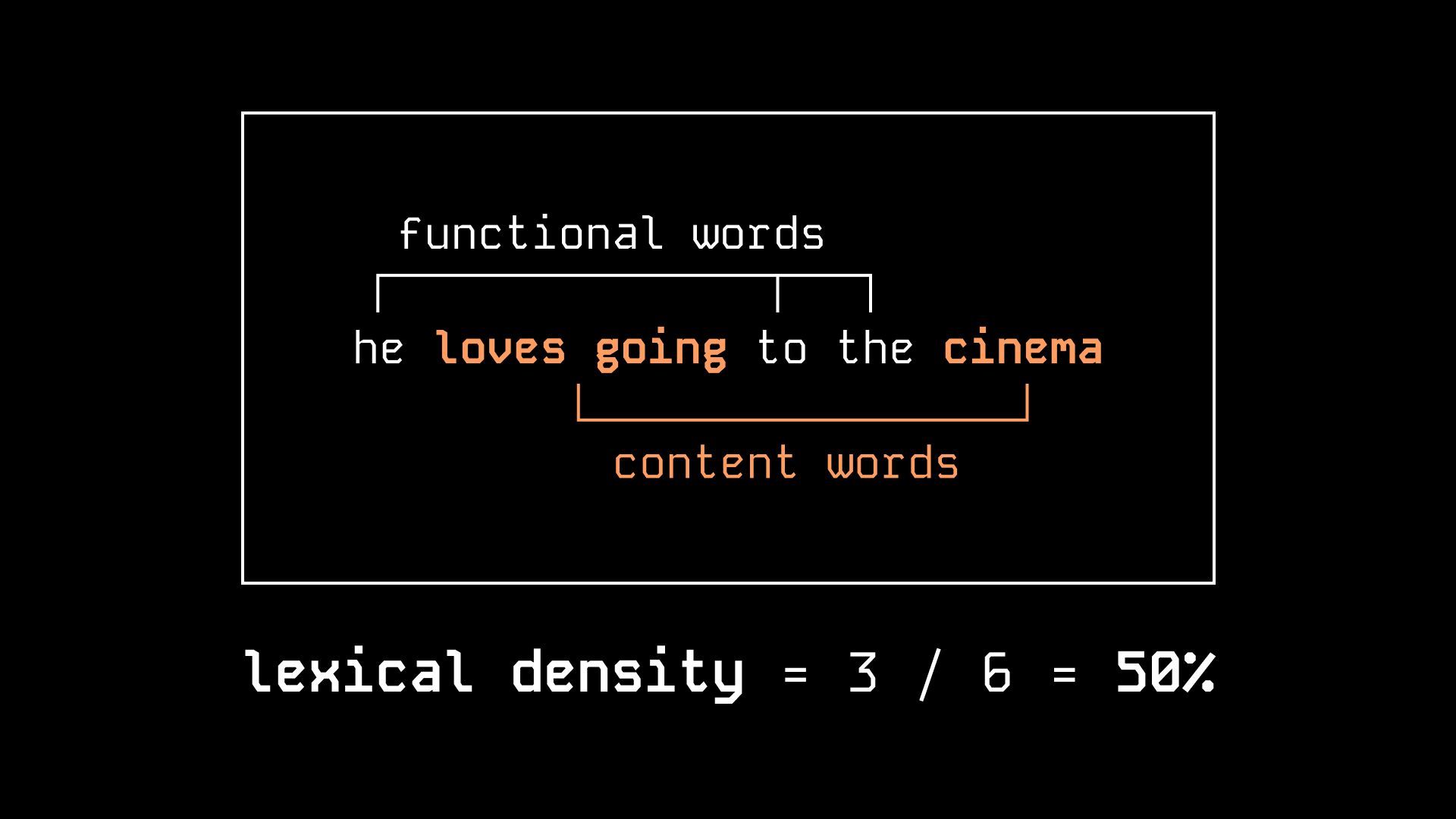

Evan Zhou demonstrated this through a simple experiment in which he compared the user experience of a traditional to-do list app with that of an LLM turned into a to-do list app.

In the traditional app, users could see exactly what each action would do: tapping "new reminder" showed a text box for the name, tapping the date icon let them choose a due date.

But with the chat interface, users had none of that feedback. They could see the text they were typing, but had no way to know what that would mean to the AI.

Even when AI platforms added confirmations to address this uncertainty, users faced an impossible choice: either uncertainty without confirmation, or unnecessary hindrance with confirmation. From this, he argued that the problem with chat interfaces is that they lack the feedback and preview that make traditional interfaces feel natural and trustworthy.

"Good tools make it clear how they should be used. And more importantly, how they should not be used. If we think about a good pair of gloves, it's immediately obvious how we should use them." — Amelia Wattenberger in "Why Chatbots Are Not The Future"

Through user interviews at [HCL], we found that even for tasks like research, users display a level of distrust and often question the LLM through long multi-turn conversations. Although Evan Zhou's example focuses on to-do lists, it raises the question: is there a UI with better feedback for other tasks, one that hasn't been built yet?

How do we solve this problem?

The cognitive bottleneck created by chat interfaces demands fundamentally different approaches to human-AI interaction. We're exploring three promising directions that reduce mental processing load while preserving AI's capabilities.

An interface that understands you

Traditional interfaces force users to translate their intentions into the computer's language. Adaptive interfaces flip this relationship—they learn how you think and present information in formats that match your mental models.

Toby Brown (@tobyab_), August 14, 2024:

"Change is today. Say hello to Beem"

Toby Brown's work at Beem Computer exemplifies this approach. Instead of making users parse text like "You have three meetings tomorrow, including a 2 PM conflict with your dentist appointment," his AI-native system presents calendar conflicts as familiar visual blocks with clear affordances. Users see their day as they naturally conceptualize it—spatial, visual, and immediately actionable.

The beauty of adaptive interfaces is that they rely on higher-level components rather than on primitives, as generative UI does. Rather than generating buttons and menus on the fly, an adaptive interface learns about your preferences and habits to provide reminders and assistance.

A future with no apps

Today's operating systems are built around letting users install a series of apps and use them. However, as agentic systems become more powerful and start to use apps on their own, one might argue that in the future the concept of apps will no longer exist, at least for humans.

In a future without apps, AI would create a unified and consistent UI on top of MCP (Model Context Protocol) servers, through which users would interact with everything - their calendar, their notes, their finances, their social connections. Imagine the true super app.

Mercury OS

Mercury OS experimented with this idea. It attempted to create an interface that was generated dynamically based on what the user needed to accomplish, rather than forcing them to navigate through predetermined app structures.

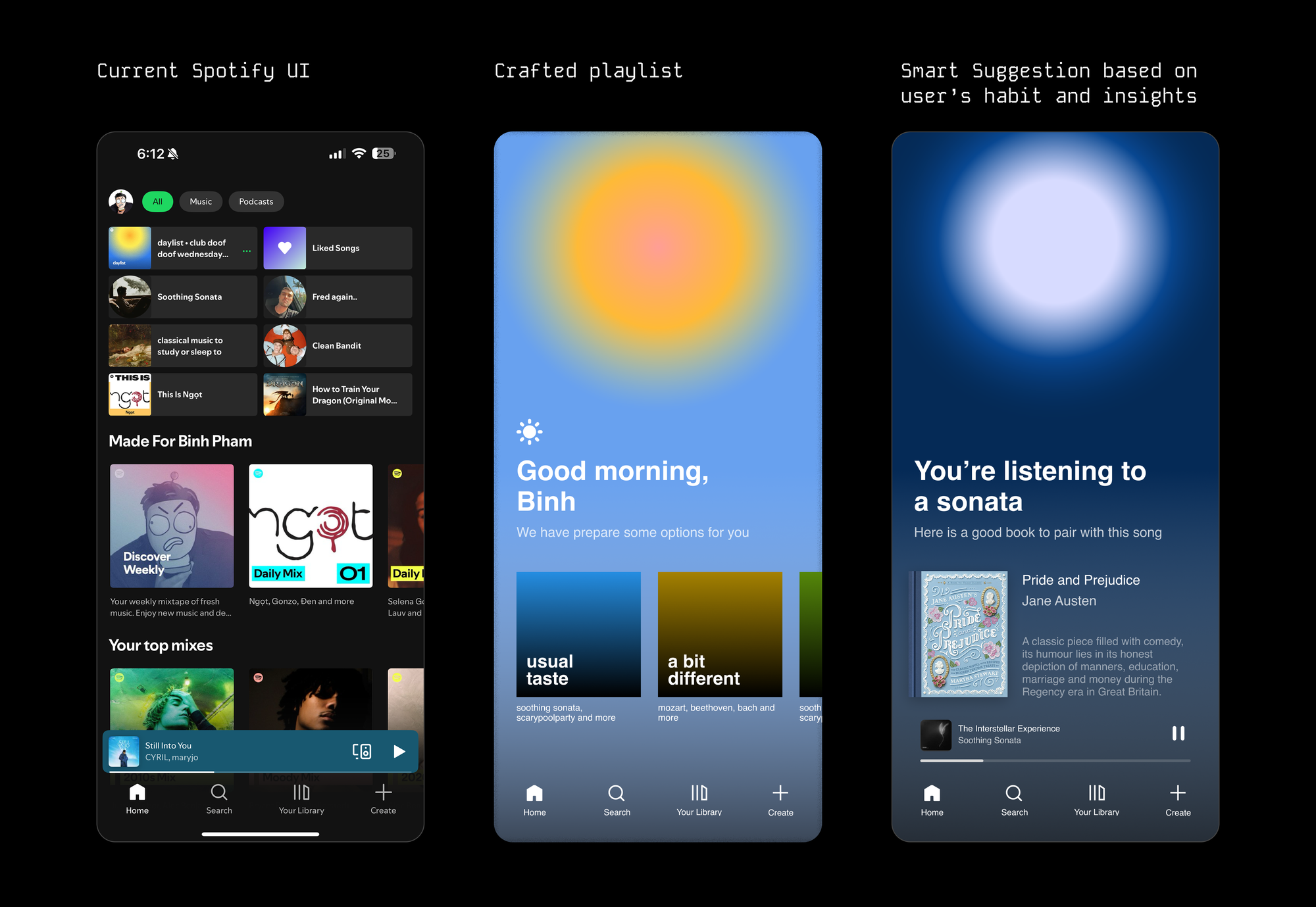

Improving existing designs

I often look at existing designs and run sprints on how I can simplify them. There are endless opportunities here. Mobile app design always has to strike a balance between displaying a lot of choices and keeping the feature set small. With AI, this limit can be easily removed.

Binh Pham (@pham_blnh), June 18, 2025:

"Zen, an experiment on LLM interface at @humancomplab. It’s an upgraded reading experience where you can converse with an AI companion as you go through a book. Instead of the usual toolbar popping up when you highlight a paragraph, a conversation interface appear: letting you…"

An experiment on reading

Some of the greatest examples of reworking user interfaces with AI come from Amelia Wattenberger. Her work on adaptive AI interfaces is some of the best out there, showing how we can fundamentally rethink how interfaces respond to user needs rather than forcing users to adapt to static designs.

Amelia Wattenberger's AI writing tool

A reading experience in which the user can zoom in and out of paragraphs and chapters.

Endnote

With the right mindset, we can apply a new guideline to all existing products, essentially creating a new design paradigm. That's what we're trying to do at Human Computer Lab.

The question isn't whether we'll move beyond chat interfaces - it's how quickly we can build something better. Every day millions of people still open ChatGPT and wait for text to scroll across their screen, but that doesn't have to be the future we accept.